Beauty in Details: HSE University and AIRI Scientists Develop a Method for High-Quality Image Editing

Researchers from the HSE AI Research Centre, AIRI, and the University of Bremen have developed StyleFeatureEditor, a new image editing method based on deep learning. The tool reproduces even the smallest details of an image and preserves them during editing, so users can change hair colour or facial expressions without sacrificing image quality. The results of this three-party collaboration were presented at CVPR 2024, a highly cited computer vision conference.

Artificial intelligence can already generate and edit images using generative adversarial networks (GANs). A GAN consists of two separate networks: a generator that creates images and a discriminator that distinguishes between real and generated samples. The two networks compete with each other during training. StyleGAN marks a new stage in the development of this architecture: it can generate images and modify specific parts of them on request, but until now it has not been able to work with real photos.

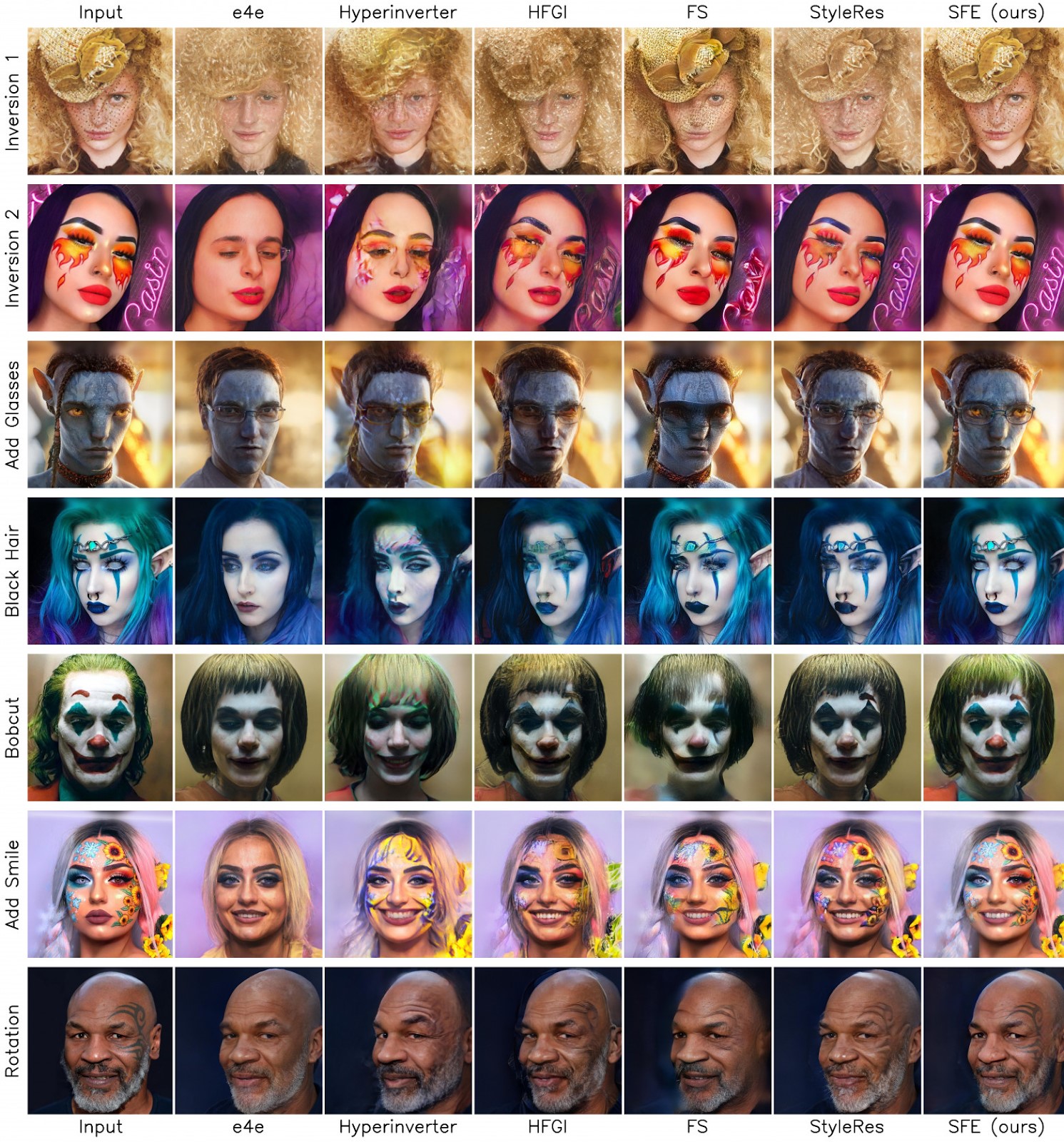

Researchers from the HSE AI Research Centre, the Artificial Intelligence Research Institute (AIRI), and the University of Bremen have proposed a method for editing real images quickly and efficiently. The StyleFeatureEditor approach consists of two modules: the first inverts (reconstructs) the original image, and the second edits this reconstruction. The results of these two steps are passed to StyleGAN, which generates the edited image from the internal representations. In doing so, the developers addressed a trade-off encountered in previous research. With a small set of representations, the network could edit the image well but lost some details of the original. With a larger set, all the details were preserved, but the network had difficulty transforming them correctly for the requested edit.

To resolve this trade-off, the researchers proposed the following: the first module finds both large and small representations, while the second learns to edit the larger ones using the smaller ones as a reference.
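The data flow between the two modules can be illustrated with a deliberately tiny sketch. This is not the authors' implementation: every name here (`invert`, `edit_small`, `edit_large`, `generate`, `edit_image`) is hypothetical, images are plain lists of numbers, and the "edit" is a simple brightness shift. It only shows the idea of editing the detailed representation by following the change observed in the compact one.

```python
from dataclasses import dataclass
from typing import List

Vector = List[float]  # toy stand-in for an image or a feature tensor


@dataclass
class Inversion:
    small: Vector  # compact representation: easy to edit, loses detail
    large: Vector  # detailed representation: keeps detail, hard to edit


def invert(image: Vector) -> Inversion:
    """Toy first module: derive both representations from the image."""
    mean = sum(image) / len(image)
    return Inversion(small=[mean], large=list(image))


def edit_small(small: Vector, strength: float) -> Vector:
    """Toy edit in the compact space (here: shift the overall brightness)."""
    return [value + strength for value in small]


def edit_large(inversion: Inversion, edited_small: Vector) -> Vector:
    """Toy second module: move the detailed representation by the change
    seen between the original and the edited compact representation."""
    delta = edited_small[0] - inversion.small[0]
    return [value + delta for value in inversion.large]


def generate(large: Vector) -> Vector:
    """Toy generator: decodes the representation unchanged."""
    return list(large)


def edit_image(image: Vector, strength: float) -> Vector:
    """Invert, edit the small code, transfer the edit, then generate."""
    inversion = invert(image)
    edited_small = edit_small(inversion.small, strength)
    return generate(edit_large(inversion, edited_small))
```

In this toy version, the differences between neighbouring values (the "details") survive the edit unchanged, while the overall level follows the edit applied to the compact code. In the real system both modules are learned neural networks rather than fixed rules.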

To train these modules to edit the representations accurately, however, the neural network needs both real images and edited versions of those same images.

‘We needed examples, such as the same face with different expressions, hairstyles, and details. Unfortunately, such image pairs do not exist at the moment. So, we came up with a trick: using a method that works with small representations, we created a reconstruction of a real image and an example of editing this reconstruction. Although the examples were relatively simple and without details, the model clearly understood how to make the edits,’ explains Denis Bobkov, one of the authors of the article, a research intern at the Centre of Deep Learning and Bayesian Methods of the AI and Digital Science Institute (part of the HSE Faculty of Computer Science), and a Junior Research Fellow at AIRI’s Fusion Brain Lab.

However, training only on generated (simple) examples leads to a loss of detail when working with real (complex) images. To prevent this, the researchers added real images to the training dataset, and the neural network learnt to reconstruct them in detail.
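The way the two kinds of training examples fit together can be sketched as follows. This is an illustration under assumptions, not the published training code: `Sample`, `build_training_set`, and the `reconstruct` and `apply_edit` callables are hypothetical names, and pairing each real image with itself as a reconstruction target is one plausible reading of the description above.

```python
from dataclasses import dataclass
from typing import Callable, List, Sequence

Image = List[float]  # toy stand-in for a real image tensor


@dataclass
class Sample:
    source: Image  # what the model receives
    target: Image  # what the model should produce
    edit: str      # requested edit, or "none" for plain reconstruction


def build_training_set(
    real_images: Sequence[Image],
    reconstruct: Callable[[Image], Image],
    apply_edit: Callable[[Image, str], Image],
    edits: Sequence[str],
) -> List[Sample]:
    """Mix generated edit pairs with real-image reconstruction samples."""
    samples: List[Sample] = []
    for image in real_images:
        # Simple, low-detail reconstruction from a small-representation method.
        simple = reconstruct(image)
        # Generated pairs: the reconstruction and an edited version of it.
        for name in edits:
            samples.append(Sample(simple, apply_edit(simple, name), name))
        # Real image: the model is asked to reproduce it in full detail.
        samples.append(Sample(image, image, "none"))
    return samples
```

The generated pairs teach the model what an edit should do, while the real-image samples keep it from discarding fine detail.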

Thus, by showing the model how to edit both simple and complex images, the scientists created conditions under which the network could edit complex images more effectively. In particular, the approach adds new style elements while preserving the details of the original image better than other existing methods do.

In the case of simple reconstruction (first row), StyleFeatureEditor accurately reproduced a hat, while most other methods lost it almost completely. The method also showed the best results when adding accessories (third row): most methods could add glasses, but only StyleFeatureEditor retained the original eye colour.

‘Thanks to this training technique on generated data, we have obtained a model with high editing quality and a fast processing speed due to the use of relatively lightweight neural networks. The StyleFeatureEditor framework requires only 0.07 seconds to edit a single image,’ says Aibek Alanov, Head of the Centre of Deep Learning and Bayesian Methods of the AI and Digital Science Institute (part of the HSE Faculty of Computer Science), and leader of the research group ‘Controlled Generative AI’ at AIRI's Fusion Brain Lab.

The research was funded by a grant from the Analytical Centre under the Government of the Russian Federation for AI research centres.

The research results will be presented at the Fall into ML 2024 conference on artificial intelligence and machine learning, which will take place at HSE University on October 25–26, 2024. Leading AI scientists will discuss the best papers published at top-tier (A*) flagship AI conferences in 2024. A demo of the developed method can be tried out on HuggingFace, and the source code is available on GitHub.